How to Evaluate AI Features in Marketing Tools

"A lot of demos look impressive, but they fall apart outside a perfect setup," says Leah Miranda, Head of Demand Gen & Lifecycle at Zapier.

Miranda has evaluated enough AI-powered tools to recognize the pattern. The demo environment is pristine. The data is complete. The use case is straightforward. Everything works beautifully. Then you deploy it with real marketing data, and the experience doesn't match.

This gap between demo and deployment has become the central challenge of AI tool evaluation. 73% of marketing ops professionals are actively using, testing, or experimenting with AI tools. 61% plan to invest in AI and ML-based tools. The demand is there. The ability to distinguish genuine capability from marketing claims lags behind.

The good news: the evaluation framework isn't complicated. It starts with asking the right questions and recognizing the red flags.

Start with AI outcomes, not AI features

The most common mistake in AI tool evaluation is leading with features. The vendor shows you capabilities. You get excited about what the AI can do. You forget to ask whether what it can do aligns with what you need done.

Flip the sequence.

Step one: define your business outcomes

Before any demo, articulate the specific results you're trying to achieve. Not "use AI for email marketing" but "reduce email production time from two weeks to three days" or "increase personalization coverage from 10% of sends to 80% without adding headcount."

Business outcomes should be:

- Measurable (you can tell if you achieved them)

- Specific (not general efficiency improvements)

- Time-bound (achievable within a reasonable evaluation period)
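To make these criteria concrete, you can record each outcome as a baseline, a target, and a deadline. That way "did we achieve it?" gets a yes/no answer. The following is a minimal Python sketch. The class and field names are hypothetical, and the deadline dates are placeholders.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class BusinessOutcome:
    """One measurable, specific, time-bound outcome (field names are illustrative)."""
    metric: str            # what you measure
    baseline: float        # where you are today
    target: float          # where you need to be
    deadline: date         # end of the evaluation period
    lower_is_better: bool  # True for time/cost metrics, False for coverage/rates

    def achieved(self, observed: float, on: date) -> bool:
        """True only if the observed value meets the target by the deadline."""
        if on > self.deadline:
            return False
        if self.lower_is_better:
            return observed <= self.target
        return observed >= self.target


# The two example outcomes from the text; the deadline is a placeholder.
production_time = BusinessOutcome(
    metric="email production time (days)",
    baseline=14, target=3,
    deadline=date(2025, 6, 30), lower_is_better=True,
)
personalization = BusinessOutcome(
    metric="share of sends personalized (%)",
    baseline=10, target=80,
    deadline=date(2025, 6, 30), lower_is_better=False,
)
```

Here `production_time.achieved(4, date(2025, 5, 1))` returns `False`. Four days beats the two-week baseline, but it still misses the three-day target.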

Step two: identify 3-5 primary use cases

From those outcomes, define the specific use cases the AI needs to support. Be concrete:

| Vague Use Case | Specific Use Case |
|---|---|
| AI for content | Generate first-draft subject lines for weekly promotional sends |
| AI for personalization | Create segment-specific copy variants for 12 audience segments |
| AI for analytics | Identify underperforming campaigns within 24 hours of send |


Step three: evaluate against those use cases

Now you have a framework. The demo should prove the tool can deliver your specific use cases, not impressive capabilities you won't use.

The goal is to "test how well specific AI features advance your marketing goals," rather than getting distracted by features that solve problems you don't have.
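One way to make step three explicit is a weighted scorecard. Score each demo from 0 to 5 on your own use cases, then weight each score by how much that use case matters to your outcomes. The following is a minimal sketch. The weights, the 0-5 scale, and the shortened use-case labels are assumptions, not a standard.

```python
# Hypothetical weights: how much each use case matters to your outcomes (sum to 1).
USE_CASE_WEIGHTS = {
    "subject lines for weekly promotional sends": 0.5,
    "copy variants for 12 audience segments": 0.3,
    "flag underperforming campaigns within 24h": 0.2,
}


def score_vendor(demo_scores: dict[str, int]) -> float:
    """Weighted 0-5 score over YOUR use cases only.

    A use case the demo never showed counts as 0, and scores for
    features outside your list are ignored entirely.
    """
    total = 0.0
    for use_case, weight in USE_CASE_WEIGHTS.items():
        score = demo_scores.get(use_case, 0)
        if not 0 <= score <= 5:
            raise ValueError(f"score for {use_case!r} must be 0-5, got {score}")
        total += weight * score
    return round(total, 2)
```

A vendor scoring 4, 3, and 5 on the three use cases gets 3.9. A vendor that dazzles on something off your list earns nothing for it.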

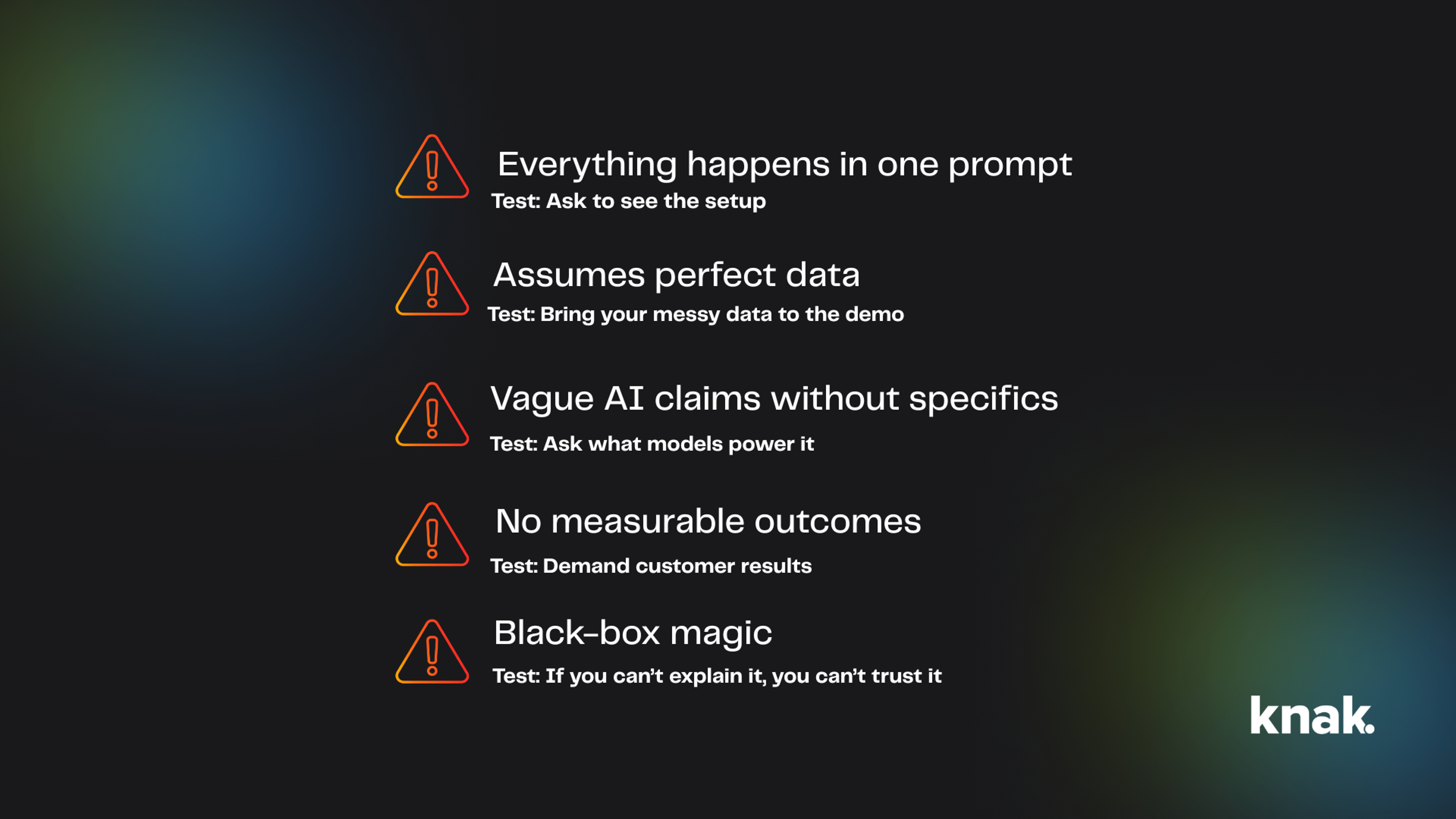

Red flags in AI tool demos

Miranda's experience identifies specific patterns that signal problems.

Red flag one: Everything happens in one prompt

"If research, decisions, and execution all come from a single prompt, that's not an agent. That's just a response."

This applies beyond agents to AI features generally. When a demo shows complex outcomes from minimal input, ask what's happening behind the scenes. Often, the demo is showing a happy path that requires significant setup or human intervention to achieve in practice.

The test: ask the vendor to show you the setup. How much configuration was required to make that demo work? How much will you need to maintain?

Red flag two: Assumes perfect data

"Let's be honest, marketing data is messy. That's normal."

Miranda is direct: "If a demo only works when every field is clean and complete, it's not realistic."

Marketing data has gaps. CRM records are incomplete. Segment definitions overlap. Historical data has inconsistencies. Any AI tool that requires perfect data to function isn't ready for enterprise marketing environments.

The test: bring your own data to the demo. Not your cleanest data, your realistic data. How does the tool handle missing fields? Conflicting values? Unexpected formats?
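To prepare for the demo, you can take one realistic record and derive deliberately broken copies from it, with one defect per copy. This shows exactly which kinds of mess the tool tolerates. The following is a minimal sketch. The field names and the specific defects are hypothetical examples of the problems named above.

```python
# Hypothetical contact record; substitute field names from your own CRM export.
BASE_RECORD = {
    "email": "jordan@example.com",
    "first_name": "Jordan",
    "segment": "enterprise",
    "last_purchase": "2024-11-03",
    "lifetime_value": "12500",
}


def messy_variants(record: dict) -> dict[str, dict]:
    """Return named copies of `record`, each with exactly one realistic defect."""
    variants = {}

    # Missing fields: drop each field in turn (dict() copies, so the original is untouched).
    for field in record:
        v = dict(record)
        del v[field]
        variants[f"missing_{field}"] = v

    # Blank rather than absent values, which many exports produce.
    v = dict(record)
    v["first_name"] = ""
    variants["blank_first_name"] = v

    # Conflicting values: the contact falls into two overlapping segments.
    v = dict(record)
    v["segment"] = ["enterprise", "smb"]
    variants["conflicting_segment"] = v

    # Unexpected formats: US-style date and a currency-formatted number.
    v = dict(record)
    v["last_purchase"] = "11/03/2024"
    v["lifetime_value"] = "$12,500.00"
    variants["unexpected_formats"] = v

    return variants
```

For the sample record, this yields eight variants. Run each one through the tool and note whether it fails loudly, guesses, or silently produces wrong output.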

Red flag three: Vague AI claims without specifics

"AI-powered" has become meaningless from overuse. Every tool claims AI. Few explain what that means.

Questions that probe beyond the buzzword:

- What specific AI models or algorithms power this feature?

- What data does the AI train on or use?

- Is the AI generating original output or selecting from pre-defined options?

A vendor who can't answer these questions clearly either doesn't understand their own technology or is obscuring limitations.

Red flag four: No measurable outcomes

When asked about results, some vendors pivot to roadmaps. Others offer vague claims about efficiency improvements. Neither is sufficient.

Demand specifics:

- Can you show measurable outcomes from existing customers?

- What metrics improved and by how much?

- How long did it take to see those results?

No customer results means you're the beta tester. That's a valid choice if you go in with eyes open, but it's not what most buyers expect.

What measurable outcomes look like in practice: OpenAI's marketing operations team reported that Knak's AI capabilities produce 80–90% complete email drafts from briefs and documents, compressing production time from weeks to minutes. That's the kind of specific, verifiable claim that separates real capability from demo magic.

Red flag five: Black-box magic

Some vendors treat their AI as proprietary secret sauce that can't be explained. This creates problems beyond intellectual curiosity.

If you can't understand how the AI makes decisions, you can't:

- Debug when it produces bad outputs

- Explain decisions to stakeholders

- Build governance around appropriate use

- Trust it with anything consequential

Transparency about capabilities and limitations signals maturity. Opacity signals either immaturity or something to hide.


The "agent washing" phenomenon

The term "agent washing" describes vendors claiming AI agent capabilities without substance, slapping "AI agent" on features that don't meet any reasonable definition of agentic.

The pattern is familiar from previous technology hype cycles. Everything became "cloud" for a while. Then "machine learning" appeared in every product description. Now "AI agent" is the label that closes deals.

Recognizing agent washing:

| What They Say | What It Often Means |
|---|---|
| "AI-powered workflows" | Rule-based automation with GPT bolted on |
| "Intelligent agents" | Chatbot with a new name |
| "Autonomous campaign optimization" | A/B testing that ran automatically |
| "AI that learns from your data" | Basic analytics with AI branding |


Not all of these are bad products. Many deliver genuine value. The problem is the mismatch between claim and reality. If you're buying an "AI agent" and getting a chatbot, you'll be disappointed even if the chatbot is useful.

The fix is straightforward: ask vendors to define their terms. What specifically makes this an agent versus an assistant versus an automation? What does it do without human prompting? What can't it do?
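To hold vendors to their definitions, record their answers as yes/no. Then apply a rubric you agreed on internally before the demo. The criteria below are a hypothetical rubric that builds on Miranda's single-prompt test. They are not an industry standard.

```python
from dataclasses import dataclass


@dataclass
class VendorAnswers:
    acts_without_prompting: bool    # starts work from a trigger, not a human prompt
    plans_multiple_steps: bool      # research, decision, and execution are separate steps
    uses_other_systems: bool        # takes actions in your MAP/CRM, not just returns text
    follows_fixed_rules_only: bool  # behavior fully specified by if/then rules


def classify(answers: VendorAnswers) -> str:
    """Hypothetical rubric: what the feature actually is, regardless of its label."""
    if answers.follows_fixed_rules_only:
        return "automation"
    if (answers.acts_without_prompting
            and answers.plans_multiple_steps
            and answers.uses_other_systems):
        return "agent"
    # Produces output on request but does not act on its own.
    return "assistant"
```

Suppose a feature is sold as an "AI agent" but classifies as "assistant". That is the claim-versus-reality mismatch the table describes, even if the assistant is useful.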

Questions AI vendors don't expect

The best evaluation questions are ones the vendor hasn't rehearsed answers for. These reveal actual capabilities rather than polished positioning.

On capabilities:

- What does this AI NOT do well?

- What use cases have customers tried that didn't work?

- When should I NOT use this feature?

Vendors who can answer these questions honestly understand their product. Those who insist everything works perfectly are either uninformed or dishonest.

On constraints:

- How is the AI constrained or governed?

- What prevents it from generating inappropriate outputs?

- How do you handle brand voice consistency?

AI without constraints is AI that will embarrass you eventually. Understanding the guardrails is as important as understanding the capabilities.

On data:

- What data does this feature require to function?

- What happens when that data is incomplete?

- Is any data sent to external systems or used for training?

Data questions reveal both practical requirements and privacy implications. 52% of marketers view data privacy as the primary challenge when adopting AI. Your security and legal teams will ask these questions. Better to have answers before you're deep in procurement.

On integration:

- How does this connect to my existing tools?

- What manual steps remain even with the integration?

- How does data sync? Real-time, batch, manual?

89% of marketing ops professionals say integration capability is their top priority for new technology. AI features that don't integrate with your stack create new silos rather than solving existing ones.

Mature vs experimental AI capabilities

Not all AI capabilities are equally proven. Understanding where different applications fall on the maturity curve helps calibrate expectations.

Mature capabilities (proven value, predictable results):

Predictive analytics. AI that identifies patterns in historical data to forecast future performance. This technology has years of refinement and clear measurement criteria.

Basic personalization. Product recommendations, send time optimization, subject line selection from proven variants. The AI is making choices from defined options based on data, not generating novel content.

A/B testing optimization. AI that determines winning variants and allocates traffic accordingly. Well-understood problem with established solutions.
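Tools that "allocate traffic to the winning variant" commonly use a multi-armed bandit. The following sketch uses Thompson sampling, one standard bandit method. It simulates sends with made-up open rates. It is an illustration of the technique, not how any particular vendor implements it.

```python
import random


def thompson_allocate(true_rates: list[float], sends: int, seed: int = 0) -> list[int]:
    """Simulate Thompson sampling over email variants; return sends per variant.

    `true_rates` are hypothetical open rates used only to simulate outcomes;
    the allocation logic sees only the opens it has observed.
    """
    rng = random.Random(seed)
    opens = [0] * len(true_rates)
    non_opens = [0] * len(true_rates)
    allocated = [0] * len(true_rates)

    for _ in range(sends):
        # Draw a plausible open rate for each variant from its Beta posterior
        # (uniform Beta(1, 1) prior) and send the variant with the highest draw.
        draws = [rng.betavariate(o + 1, n + 1) for o, n in zip(opens, non_opens)]
        arm = draws.index(max(draws))
        allocated[arm] += 1

        # Simulate whether the recipient opened it.
        if rng.random() < true_rates[arm]:
            opens[arm] += 1
        else:
            non_opens[arm] += 1

    return allocated
```

With variants opening at 20% and 30%, `thompson_allocate([0.20, 0.30], sends=5000)` shifts most sends to the second variant. It still explores the first. That balance between exploring and exploiting is part of why this is considered a well-understood problem.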

Sentiment analysis. Classifying text as positive, negative, or neutral. Mature technology with clear limitations (sarcasm, context) that are well-documented.
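The following deliberately naive word-list classifier shows why sarcasm is a known weak spot. The word lists are made up. Real sentiment models are far more sophisticated, but the failure below is the kind of sarcasm and context limitation described above.

```python
import re

# Toy word lists, for illustration only.
POSITIVE = {"love", "great", "excellent", "thanks"}
NEGATIVE = {"hate", "broken", "terrible", "spam"}


def sentiment(text: str) -> str:
    """Label text positive/negative/neutral by counting listed words."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

Consider `sentiment("Oh great, the promo code doesn't work")`. It returns `"positive"` because "great" is on the list. Any human reader would see a complaint.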

Emerging capabilities (showing promise, results vary):

Content generation. AI can produce drafts that require human editing. The gap between draft and publishable varies by use case, tool, and how much context the AI has access to. 49% of email marketers use AI to generate campaign content, but with human review. The key is streamlining workflows with AI rather than replacing human judgment entirely.

Advanced personalization. Dynamic content that adapts to individual recipients in real time. The technology works; the data requirements and governance complexity are usually what hold it back.

Insight generation. AI that doesn't just report metrics but explains what's happening and suggests actions. Promising but inconsistent, heavily dependent on data quality.

Experimental capabilities (interesting potential, deploy carefully):

Autonomous campaign execution. AI that creates, deploys, and optimizes campaigns without human approval. The technology exists in demos. The trust and governance required for production use don't.

Full content creation without review. AI-generated content that publishes directly. High risk given hallucination rates and brand consistency requirements.

Complex multi-step workflows. Agents that orchestrate actions across multiple systems based on autonomous decisions. It's early days, with significant integration and reliability challenges. The direction is clear, though: OpenAI's own marketing operations team envisions campaign creation as a coordinated system of AI agents (one for planning, one for creation, others for data, audiences, and optimization), with humans steering strategy.

Enterprise evaluation criteria

Beyond feature evaluation, enterprise buyers need to assess vendors on dimensions that affect long-term success.

Integration depth

Surface integration (API exists) differs from deep integration (bidirectional sync, real-time updates, workflow triggers). Understand exactly how the AI tool will connect to your MAP, CRM, and content systems. What data flows where? What manual steps remain? Vendors who approach AI thoughtfully can articulate this clearly.

Security and governance

- What security certifications does the vendor hold?

- How is customer data isolated?

- What audit trails exist for AI actions?

- Can you control what data the AI accesses?

Over 70% of marketers have encountered AI-related incidents. Governance isn't optional.
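When you ask what audit trails exist, it helps to know what a useful record contains. The following is a minimal sketch of one append-only, JSON Lines entry per AI action. The field names are hypothetical, and a vendor's actual schema will differ.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AIActionRecord:
    actor: str                       # which AI feature acted
    action: str                      # what it did
    data_accessed: tuple[str, ...]   # which fields or sources it read
    output_ref: str                  # pointer to what it produced
    approved_by: Optional[str]       # human approver; None means it ran unreviewed
    timestamp: str                   # UTC, ISO 8601


def audit_line(actor: str, action: str, data_accessed: list[str],
               output_ref: str, approved_by: Optional[str] = None) -> str:
    """Build one JSON Lines entry to append to an audit log."""
    record = AIActionRecord(
        actor=actor,
        action=action,
        data_accessed=tuple(data_accessed),
        output_ref=output_ref,
        approved_by=approved_by,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    return json.dumps(asdict(record)) + "\n"
```

Check whether the vendor's logs capture at least this much. Pay particular attention to what data each action touched and whether a human approved it.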

Scalability and performance

- How does the AI perform with your data volume?

- What are the latency characteristics?

- Are there rate limits or usage caps?

Demo environments rarely reflect production scale. Get specifics on performance at your anticipated volume.
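Vendors often quote average latency, but during a pilot the slow tail at your volume is more telling. The following is a minimal sketch. It computes nearest-rank percentiles from response times you record yourself, and the sample numbers are made up.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # multiply first to avoid float drift
    return ordered[rank - 1]


def latency_report(samples_ms: list[float]) -> dict[str, float]:
    """Median and tail latencies for a batch of pilot requests."""
    return {f"p{p}": percentile(samples_ms, p) for p in (50, 95, 99)}


# Made-up response times (ms) from ten pilot requests.
pilot_ms = [220, 240, 250, 260, 270, 300, 310, 330, 1800, 4200]
```

For these samples, the median is 270 ms but p95 is 4,200 ms. The mean of 818 ms hides both facts.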

Support and implementation

- What implementation support is included?

- What ongoing training is available?

- What's the typical time to value?

AI tools often require more configuration and tuning than traditional software. Understand what support you'll get.

Vendor stability

The AI landscape is volatile. Startups get acquired or run out of funding. Large vendors deprecate features. Evaluate:

- How long has the vendor been in market?

- What's their funding situation (if applicable)?

- What's their customer retention rate?

Building workflows around AI tools that disappear creates more problems than the tools solved.

Running an effective AI evaluation

With the framework in hand, the practical evaluation process follows a clear sequence.

Phase one: Requirements definition

Before talking to vendors:

- Define business outcomes (what you're trying to achieve)

- Identify primary use cases (how AI will help)

- Document data availability (what you can provide)

- Clarify integration requirements (what systems must connect)

- Establish governance requirements (what controls you need)

- Map your existing marketing campaign workflows to identify where AI fits

Phase two: Initial vendor screening

Using your requirements:

- Request demos focused on your specific use cases

- Ask the uncomfortable questions (limitations, failures, data handling)

- Bring realistic data to the conversation

- Include stakeholders who will actually use the tool

Phase three: Proof of concept

For serious contenders:

- Run a limited pilot with your actual data

- Measure against the outcomes you defined

- Document the implementation requirements

- Assess the support quality

- Calculate realistic total cost of ownership
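A realistic total cost of ownership includes more than the license fee. The following is a minimal sketch. It adds one-time implementation and ongoing staff time for configuration and review to the subscription over a fixed horizon. Every cost category and figure here is an assumption to replace with your pilot data.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    annual_license: float           # vendor subscription per year
    implementation_one_time: float  # setup, integration, migration
    admin_hours_per_month: float    # configuring, tuning, maintaining the AI
    review_hours_per_month: float   # human review of AI output
    hourly_rate: float              # loaded cost of your team's time


def total_cost_of_ownership(c: CostInputs, years: int = 3) -> float:
    """License + one-time implementation + ongoing staff time over `years`."""
    months = 12 * years
    staff = (c.admin_hours_per_month + c.review_hours_per_month) * months * c.hourly_rate
    return c.annual_license * years + c.implementation_one_time + staff
```

Take a made-up $30,000 license, $15,000 implementation, 60 staff hours a month, and $75 an hour. Over three years, that comes to $267,000, and staff time ($162,000) outweighs the license itself.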

Phase four: Decision

With proof-of-concept data:

- Compare actual results to vendor claims

- Assess fit with team capabilities and workflows

- Calculate ROI based on real performance

- Make the decision based on evidence, not demos
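For phase four, the comparison can be reduced to a few lines. Compute the improvement the pilot actually delivered, compare it with what the vendor claimed, and then compute a simple ROI. The formula (value - cost) / cost is the standard simple ROI, but the function names and example numbers are hypothetical.

```python
def improvement(baseline: float, observed: float, lower_is_better: bool = True) -> float:
    """Relative improvement over baseline (0.6 means 60% better)."""
    change = (baseline - observed) if lower_is_better else (observed - baseline)
    return change / baseline


def delivered_vs_claimed(claimed: float, actual: float) -> float:
    """Share of the vendor's claimed improvement the pilot actually delivered."""
    return actual / claimed


def roi(annual_value: float, annual_cost: float) -> float:
    """Simple ROI: (value - cost) / cost."""
    return (annual_value - annual_cost) / annual_cost
```

For example, suppose the pilot cut production time from 10 days to 4, a 60% improvement. If the vendor claimed 80%, the tool delivered roughly three quarters of the promise. That is the number to bring to the decision, alongside ROI computed from the real cost.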

Beyond the AI evaluation

The evaluation process reveals something important: AI tool effectiveness depends as much on your readiness as on the tool's capabilities.

59% of marketing ops teams lack AI and automation expertise. The best AI tool in the hands of a team unprepared to use it delivers less value than a modest tool with a capable team.

Parallel to tool evaluation, assess:

- Does your team have the skills to configure and maintain AI tools?

- Do you have the data infrastructure AI features require?

- Have you established governance for AI use in marketing?

- Do you have executive support for the change management required?

AI tools amplify what's already there. They amplify good data and good processes. They also amplify bad data and broken workflows.

The path forward

Every marketing tool now claims AI capabilities. Most of those claims deserve skepticism. The framework for separating substance from hype isn't complicated:

Start with your business outcomes, not their feature list. Ask the questions they haven't rehearsed. Request the messy demo, not the polished one. Evaluate based on evidence from proof of concept, not promises from sales.

The vendors who survive this scrutiny deserve your consideration. The ones who can't answer basic questions about limitations, data handling, and governance reveal themselves in the process.

Explore Knak's AI capabilities with a demo tailored to your use cases.