How Knak Does Email: Email Testing At-a-Glance

By

Tania Blake

Published Nov 7, 2019

Summary - Optimize email campaigns with A/B testing. Discover the power of testing subject lines, CTAs, formats, and more. Improve engagement to get ahead of competitors.

If you’re an email marketer, you may have some questions:

- Should I put that heart-eyes emoji in the subject line of my email or leave it out?
- What format is best for my content?
- Will that GIF give me better click-thru rates?
- Should my CTA say, “Download Now” or “Get the Facts”?
- Should my CTA button be blue or green?
- And while we’re at it, should my CTA button be at the top or bottom of my email?

Fortunately, there’s a great way to find out the answer to all of those questions. It’s called A/B testing, and if you’re not doing it, it’s time to start.

We’re going to take an in-depth look at email testing in the coming months, so consider this A/B Testing 101, and read on for some of the basics.

What is A/B testing?

A/B testing in emails, also known as split testing, is the process of sending one variation of an email to a subset of your subscribers and another variation to another subset to see which performs better.

The “A” refers to the control group, and the “B” refers to the challenger, or test, group.

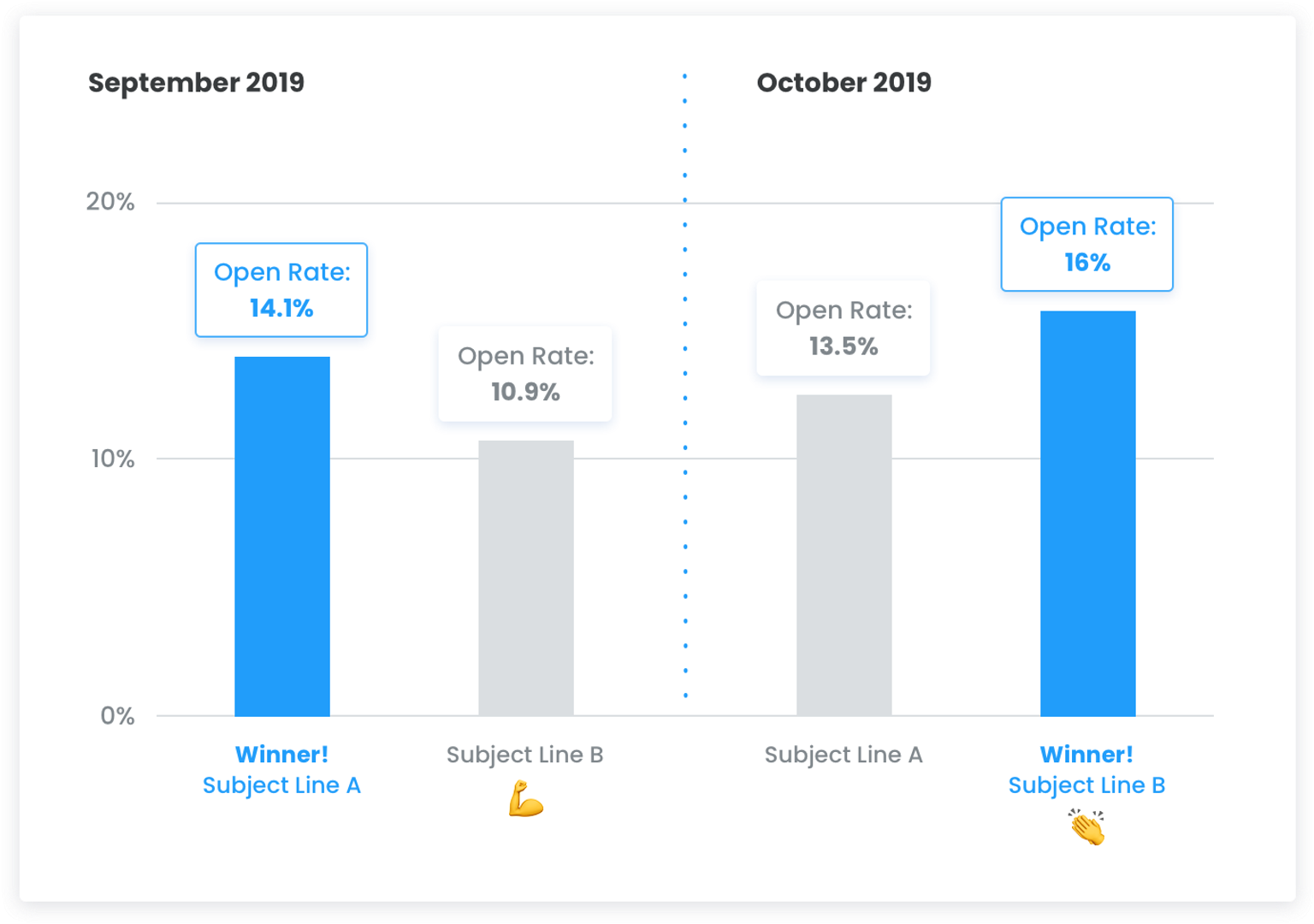

Here’s a real-life Knak example:

We launched our Knak newsletter recently. Beginning with our September edition, we wanted to test the efficacy of including an emoji in the subject line.

We set up the test in Marketo to send the newsletter with the emoji to 25% of our subscribers and the newsletter without the emoji to another 25%. The test ran, and at the end of it, Marketo sent the email with the higher-performing subject line to the remaining 50% of our subscribers.

(The results? As you can see below, in September, the subject line without the emoji performed better. In October, the winner was the one with the emoji. Stay tuned for the tiebreaker …)
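If you like to see the mechanics, here is a minimal Python sketch of that 25% / 25% / remainder split. It is an illustration only, not how Marketo implements its tests: the function names (`split_for_ab_test`, `pick_winner`), the example addresses, and the choice of open rate as the winning metric are all assumptions made for this example.

```python
import random


def split_for_ab_test(subscribers, test_fraction=0.25, seed=None):
    """Randomly split subscribers into group A, group B, and the remainder.

    Hypothetical helper: each test group gets `test_fraction` of the list,
    and everyone else waits for the winning version.
    """
    shuffled = list(subscribers)
    # Shuffle so neither group is biased by list order (e.g. signup date).
    random.Random(seed).shuffle(shuffled)
    group_size = int(len(shuffled) * test_fraction)
    group_a = shuffled[:group_size]
    group_b = shuffled[group_size:2 * group_size]
    remainder = shuffled[2 * group_size:]
    return group_a, group_b, remainder


def pick_winner(opens_a, sent_a, opens_b, sent_b):
    """Return "A" or "B" based on the higher open rate (ties go to A, the control)."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    return "A" if rate_a >= rate_b else "B"


if __name__ == "__main__":
    # Made-up subscriber list for illustration.
    subscribers = [f"user{i}@example.com" for i in range(1000)]
    group_a, group_b, remainder = split_for_ab_test(subscribers, seed=42)
    print(len(group_a), len(group_b), len(remainder))
```

The random shuffle is the important part: if group A were simply the first 25% of your list, differences between older and newer subscribers could skew the result.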

Why do I need to do A/B testing?

If you aren’t doing A/B testing, you’re losing valuable insight into the marketing tactics that resonate with your audience. Over time, those insights translate into dollars.

Gain the insight ⇒ turn it into better campaigns ⇒ reap the benefits.

If you don’t have the insight, your campaigns are at the mercy of your marketing team’s best guess, and you won’t have the benefit of hard data to support those guesses.

Our recent Email Benchmark Report notes that only 46% of those we surveyed say they regularly conduct A/B testing. That means 54% of email marketers are leaving a wealth of information on the table.

Join the minority here. A/B test your campaigns, and get a leg up on your competition.

Ok, let’s make it happen.

Here are some A/B testing dos and don’ts to help you get the most accurate results:

- Don’t test more than one thing at a time. We know you want to see which subject line/emoji/CTA/format/font color works best, but you have to stick with one thing at a time, or you won’t know which piece is influencing which result.

- Do send both tests at the same time. The time of day that your email is sent impacts your open rates. Don’t weigh a green CTA button sent at 4 PM on Sunday against a blue CTA button sent at 8 AM on Monday. (Hint: email send time is a great thing to A/B test on its own.)

- Do set goals for your tests. You need to know what you hope to gain from your A/B testing. Are you trying to improve open rates? Click-thru rates (the ratio of “clickers” to email openers)? The metrics you’re trying to improve will dictate which elements you’re testing, so channel your inner middle-school science student and start hypothesizing. Finding out if what you think will work is actually what does work is a great learning exercise for your team.

- Do test more than once. If you truly want accurate results, you need to test and test again. If we had tested the emoji in our subject line only once, we would have thought it was ineffective. But since the results the next month showed something different, we know it’s worth exploring further.

- Don’t ignore the results. Collect the data and analyze it! Keep track of your results and use them to improve your campaigns. Do the results line up with your hypotheses? Do they support the types of campaigns you’re already sending? Do you need to consider tweaking your design? Insight like this is incredibly valuable and can have a lasting impact on your campaign results (and therefore your bottom line).
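One reason to test more than once is that a small gap between A and B can be pure chance. A common way to check is a two-proportion z-test, sketched below in Python. This is our own illustrative addition rather than a feature of any particular platform: the function name `two_proportion_z_test` is hypothetical, the numbers in the usage example are made up, and the 0.05 cutoff is a convention, not a rule.

```python
import math


def two_proportion_z_test(successes_a, sent_a, successes_b, sent_b):
    """Compare two rates (e.g. open rates) and return (z, two-sided p-value).

    "Successes" can be opens or clicks, as long as both groups use the
    same metric and the same denominator.
    """
    rate_a = successes_a / sent_a
    rate_b = successes_b / sent_b
    # Pooled rate under the assumption that A and B perform the same.
    pooled = (successes_a + successes_b) / (sent_a + sent_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_error == 0:
        raise ValueError("Cannot compare groups when the pooled rate is 0 or 1.")
    z = (rate_b - rate_a) / std_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up numbers: 50 of 250 opened version A, 55 of 250 opened version B.
    z, p_value = two_proportion_z_test(50, 250, 55, 250)
    if p_value < 0.05:
        print("The difference is unlikely to be chance alone.")
    else:
        print("Too close to call. Run the test again or use a larger group.")
```

A p-value above your cutoff does not mean the two versions perform the same. It means this one test did not give you enough evidence to pick a winner, which is exactly when a repeat test earns its keep.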

Help!

Marketo, Eloqua, and most other marketing automation platforms have built-in A/B testing capabilities. If you need help setting them up (or figuring out how to use them!), we can help!

After all, we’re marketers too, and we’d love to welcome you to the 46% of us who are improving our open and click-thru rates with A/B testing.

Looking for inspiration for your next email campaign? Check out our Email Gallery to see 50+ high-converting enterprise email examples spanning 10+ campaign categories, including Content Download Emails, Product Update Emails, Survey Emails, and more.